Cloud Solutions with Microsoft Azure

Microsoft Azure is an ever-expanding set of cloud services for building, managing, and deploying applications on a global network using your preferred tools and frameworks. In this Office Hours, RPI Consultants will provide live demonstrations with interactive Q&A of Microsoft Azure, including the Azure Portal for Infrastructure, Platform, and Software-as-a-Service. We will also cover the types of databases and content storage available, and how you can use Azure for cold storage of content in your OnBase or ImageNow system.

Transcript

John Marney:

Let’s go ahead and get started here. I’m gonna share my webcam really quick. All right. Can everyone hear me? Mason, if you wouldn’t mind, could you throw a question into the question panel so I know you can hear me?

Mike:

Hey, John, it’s Mike, we can hear you.

John:

Oh, sweet. Okay. Thanks. All right. Well, thanks for joining today. Today we’re doing another RPI Consultants Office Hours. We have a number of these scheduled, I think every two weeks, and I’ll show you the schedule here in just a minute. Today we’re going to be talking about cloud solutions with Azure, which is, of course, Microsoft’s humongous cloud platform.

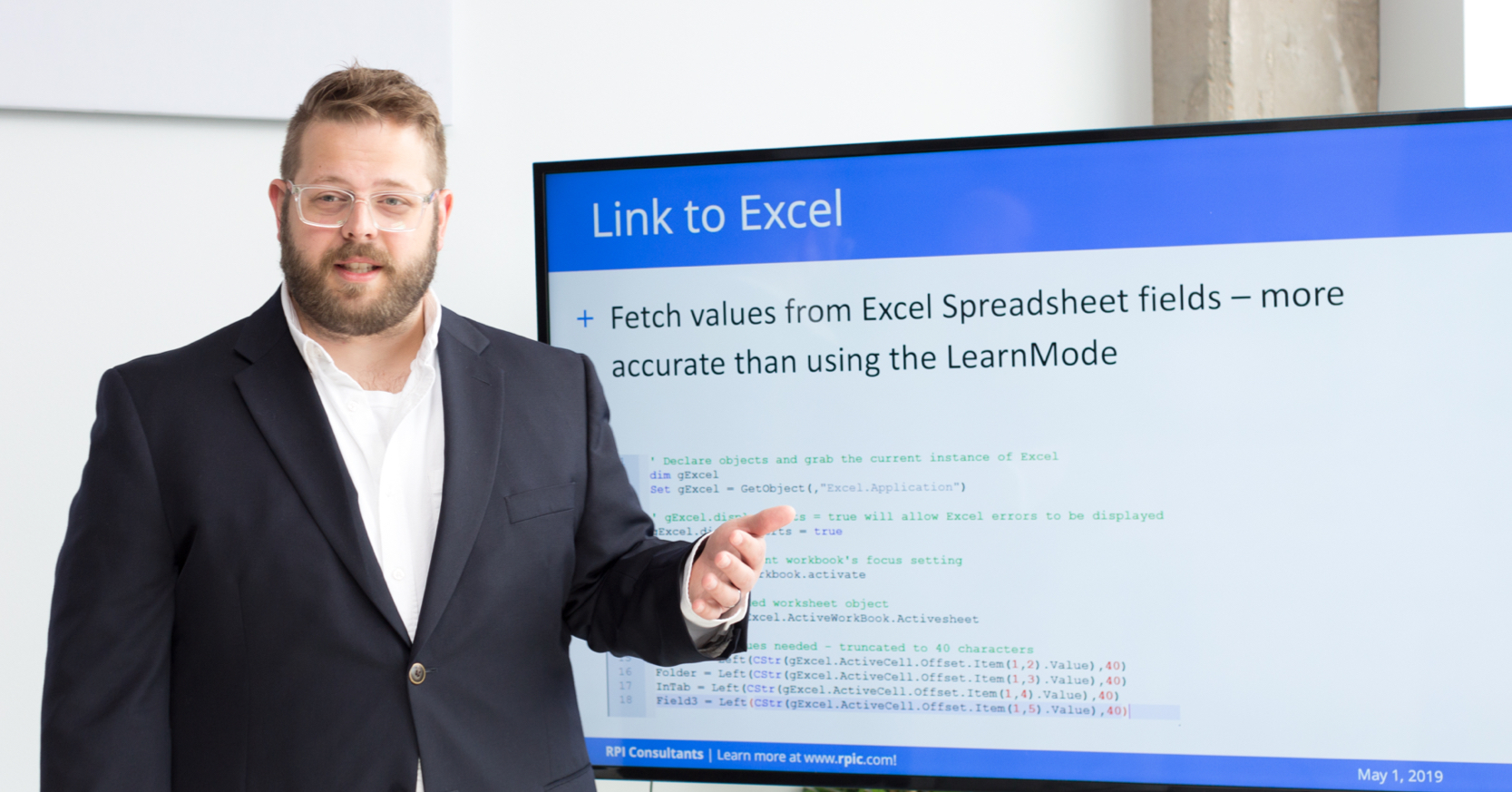

For our upcoming webinars and Office Hours, we have quite a bit of content prepared over the next couple of months. The next couple of Office Hours will cover robotic process automation. The first one is an introductory course where we’ll show how to manipulate some Excel files and pull data out of them. Excel is so commonly used that RPA is frequently needed for Excel-based processes, which makes it a great place to start.

Later on, in May, we’re going to do a more advanced RPA course showing some system integrations and whatnot. It’ll build on the first one; if you can’t make the first, no problem, but if you can join us, it’ll be really interesting. We’re also going to discuss something that’s really at the forefront right now, with so many people working from home or remotely: on May 15th we’re going to cover digital signatures and digital workflows. These are the easy-to-set-up ones; we’re talking DocuSign or Adobe Sign. We’ll discuss each of those individually, as well as how you can integrate them with existing platforms you may be using. That’s the Office Hours.

We’re also going to be doing more high-level webinars on those digital signing solutions. That’s on May 6th, and we’re also going to do an overview of leveraging your Office 365 investment, or what to do with your SharePoint, on May 6th as well. A little bit about me. Everyone probably has this information already, but I’m the manager of the Content and Process Automation practice, the CPA practice. We’re not accountants, but we do work with accountants here at RPI Consultants.

I’ve been doing this for around 10 years, and I’ve been with RPI for around six. Much of my experience has been in accounts payable automation, but more broadly in process automation as a whole. In the past year to year and a half, I’ve been spending a lot of time building competency with Azure Cloud Services, and I’m excited for RPI to be able to offer more services in this space.

Microsoft Azure Cloud Services: what are we talking about? In these Office Hours, we encourage questions and audience participation. Please feel free to challenge me to explore something I didn’t cover in enough detail, or ask to see something I wasn’t even planning on talking about. Wherever these Office Hours take us, we’re happy to go. So, what is Azure? A couple of slides really quick and then we’re going to jump in. Obviously, it’s the cloud, and what is the cloud? The cloud is a series of data centers where you can build, deploy, and manage servers and applications.

Really, what we’re talking about are the different levels: infrastructure as a service, platform as a service, and software as a service. Software as a service is things like Office 365, where all you have to be concerned about is managing the application configuration and user access. With platform as a service, and we’ll dive into this, the server is managed for you, but you may still own the application install and some level of code deployment. Infrastructure as a service is really just your servers, or your storage, on someone else’s hardware. That’s what we’re going to be exploring today.
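To make the three service models concrete, here is a minimal Python sketch of the responsibility split as described above. The layer names and the single "boundary" per model are a simplification invented for illustration, not Microsoft’s official shared-responsibility matrix.

```python
# Simplified model of who manages each layer under IaaS, PaaS, and SaaS,
# following the descriptions in this session (an illustration, not an official matrix).
LAYERS = ["hardware", "server", "application", "configuration_and_users"]

# Highest layer the cloud provider manages for each service model.
PROVIDER_MANAGES_UP_TO = {
    "IaaS": "hardware",     # your servers on someone else's hardware
    "PaaS": "server",       # server managed for you; you own the app and deployment
    "SaaS": "application",  # you manage only configuration and user access
}


def who_manages(model: str, layer: str) -> str:
    """Return 'provider' or 'customer' for a given service model and layer."""
    boundary = LAYERS.index(PROVIDER_MANAGES_UP_TO[model])
    return "provider" if LAYERS.index(layer) <= boundary else "customer"


if __name__ == "__main__":
    for model in PROVIDER_MANAGES_UP_TO:
        print(model, {layer: who_manages(model, layer) for layer in LAYERS})
```

Moving from IaaS to SaaS just moves the boundary up the stack, which is why IaaS is the natural lift-and-shift starting point.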

The main reason we dive into infrastructure as a service first is that it’s the easiest to adopt. It gets your foot in the door with a cloud service provider, and it can use what’s called the lift and shift methodology, where you pick up what you have in place today and shift it over to a cloud-hosted environment. Data centers and cloud services in general are considered safe, secure, and reliable; some of that is inherent, and some of it comes from the services that are provided. Of course, Azure is not the only cloud service provider: Amazon, Google, IBM, you have a lot of options. The main reason we adopted Azure is that, as an organization, we made our own move to Office 365 a long time ago. That opened the door to some really natural efficiencies, it became easy to explore what Azure had to offer, and all of a sudden we found the power of it. I really want to share that with everyone else.

So what can you do? Today we’re going to set up some cloud storage using what’s called a storage account, and we’ll explore the options inside of it. Then I’m going to go to a local RPI server hosted in our Baltimore data center and map the Azure storage as a drive on that on-premises server. That’s the lift and shift idea: I’m taking content or storage that would normally live on an on-premises server and pushing it into the cloud, both for reliability and because it’s actually cheaper to store it out there than on our high-performance solid-state drives.

The idea is that we’re demonstrating the ability to migrate off expensive storage and hopefully realize a very quick return on investment. The type of storage I’m setting up costs dollars a month for gigabytes of storage. That’s the extent of my presentation, so I’m going to go ahead and log into the Azure portal. The first thing we’re going to do is set up our storage account, and the place you go for anything inside of Azure is portal.azure.com.
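The return-on-investment argument is simple arithmetic: monthly savings are the gigabytes moved times the price difference per GB-month, and payback is the one-time migration effort divided by those savings. Here is a minimal sketch; every price in it is a placeholder assumption, not actual Azure or hardware pricing, so plug in your own numbers.

```python
import math


def monthly_savings(gb: float, local_cost_per_gb: float, cloud_cost_per_gb: float) -> float:
    """Monthly savings from moving `gb` of content off local storage (costs per GB-month)."""
    return gb * (local_cost_per_gb - cloud_cost_per_gb)


def payback_months(one_time_cost: float, gb: float,
                   local_cost_per_gb: float, cloud_cost_per_gb: float) -> float:
    """Months until the migration effort pays for itself; infinity if it never does."""
    savings = monthly_savings(gb, local_cost_per_gb, cloud_cost_per_gb)
    if savings <= 0:
        return math.inf
    return one_time_cost / savings


if __name__ == "__main__":
    # Placeholder figures, NOT real Azure or hardware pricing.
    print(payback_months(one_time_cost=1000, gb=500,
                         local_cost_per_gb=0.10, cloud_cost_per_gb=0.02))
```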

If you don’t already have a Microsoft account or subscription, personally or within your organization, no problem: you can set up a free trial subscription all by yourself. Microsoft offers, I believe, 30 days of free credit for most services, and even after you move to pay-as-you-go, you can generally set up free or extremely cheap subscriptions for a lot of different things if you want to keep sandboxing.

The first thing you’ll see is the list of services, and there’s just a ton of stuff. If I go to create a new resource, there are a ton of different options: I can spin up a virtual machine, create new users in my Active Directory, or create new directories in my environment. At the top level in Azure are your subscriptions. I have a subscription for our business that’s already set up, and everything I’m building goes under it. I’m not going to dive into that, but you can have multiple subscriptions.

Within the portal, you can see there are lots of different things you can do. I can spin up a SQL database or a new Windows or Linux server, and I can do some really cool things like leverage their OCR and AI services, but that’s all very complex. What we’re going to do is create a storage account, so I’ll search for storage account. A storage account can store a number of different types of data: it can be a traditional file store, or it can be tables, so you could theoretically migrate table data from an existing traditional database over to a storage account.

Blobs are much more unstructured data. BLOB stands for binary large object; it’s generally the binary form of images, executables, or anything else that isn’t structured data. There are other types as well. We’re going to create a new one, and I’ll walk through a few of the options in the storage account creation. My subscription is selected. I have to select a resource group; resource groups are just logical groupings of different Azure components. I have a ton in here, so I’m going to select one we use as a playground or sandbox. Next I give it a name, which has to be globally unique across Azure because it becomes part of the account’s web address. I’m going to call this WebinarDemo1. Oops: lowercase letters and numbers only. Okay, webinardemo1, there we go. I can also specify the location, which is the actual physical data center where the storage lives. Within the United States, it may not necessarily matter for our purposes, but latency from New York to LA is going to be a lot more significant than from Kansas City to LA or from Kansas City to New York, so the selection of data center does matter.
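The naming error above comes from Azure’s storage account naming rule: 3 to 24 characters, lowercase letters and digits only. Global uniqueness can only be checked by Azure itself. The sketch below validates the local part of the rule and shows how subscription, resource group, and resource nest inside an Azure Resource Manager resource ID; the subscription ID is a made-up placeholder.

```python
import re

# Storage account names: 3-24 characters, lowercase letters and digits only.
# Global uniqueness is enforced by Azure and cannot be checked locally.
_STORAGE_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_storage_account_name(name: str) -> bool:
    return _STORAGE_ACCOUNT_NAME.fullmatch(name) is not None


def storage_account_resource_id(subscription_id: str, resource_group: str, name: str) -> str:
    """ARM resource ID: subscription -> resource group -> provider/type -> name."""
    return (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/Microsoft.Storage/storageAccounts/{name}")


if __name__ == "__main__":
    print(is_valid_storage_account_name("WebinarDemo1"))  # False: uppercase letters
    print(is_valid_storage_account_name("webinardemo1"))  # True
    print(storage_account_resource_id("00000000-0000-0000-0000-000000000000",
                                      "IMAGENOW7a", "webinardemo1"))
```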

There’s a lot to learn about geolocation in Azure, and it matters for your storage because certain data centers are paired with other data centers for disaster recovery purposes; that comes up in the replication section down here. For today’s demonstration, I’m just going to store in Central US, which is close to me. If speed matters for accessing the data, make sure you select the data center closest to wherever the access is coming from. If access is distributed, we may need to look at setting up your storage to be distributed as well. For this example, though, we’re going to set up storage in a central location.

Performance: you can look up the metrics behind these. For my purposes, Standard performance is fine; Premium is generally just faster disk access. For the account kind, I’m not going to use Blob storage. I could use StorageV1, but I’m going to use StorageV2 because that’s the default. I’m not using Blob storage because I need to store files in a traditional file system format for this demonstration. If you were only storing blobs, you could use Blob storage: for example, lots of medical applications such as EMRs, or an image archive from an ERP like SAP, store images as blobs.
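A general-purpose v2 (StorageV2) account exposes each of its core services at its own endpoint. The `webinardemo1.file.core.windows.net` address that shows up later in this demo is the file endpoint. The sketch below assumes the Azure public cloud suffix `core.windows.net`; sovereign clouds use different suffixes.

```python
# Default public endpoints for the core services of a general-purpose v2 account
# (Azure public cloud suffix assumed).
SERVICES = ("blob", "file", "queue", "table")


def service_endpoints(account_name: str) -> dict:
    return {svc: f"https://{account_name}.{svc}.core.windows.net/" for svc in SERVICES}


if __name__ == "__main__":
    for service, url in service_endpoints("webinardemo1").items():
        print(f"{service:>5}: {url}")
```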

Replication is fairly complex. You can look up the differences between these options. I’m not going to dive into them, but they’re basically different layers of redundancy, horizontally and vertically, that protect your data both for disaster recovery and for high availability during planned and unplanned downtime. I’m leaving it as the default. The access tier is about how frequently the data is going to be accessed. I’m choosing Cool; I think this used to be called cold, and it has changed since I last looked at it. Cool storage is meant for data that’s accessed less frequently; it’s cheaper to store, and in exchange, access is slower and costs more per transaction.

Again, for my purposes, slower is fine. It’s not an extreme difference: we’re generally talking fractions of a second to a second, but that’s per transaction. If I’m loading a single document, it’s probably not significant. If I’m loading thousands or millions of documents, a second per document could be significant, so it’s something to consider when you architect how this is actually done. Next, I’m going to move to networking. For the connectivity method, I’m going to leave this public, because you still have to authenticate against it even when it’s public.
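The per-transaction point is worth quantifying: extra latency multiplies by document count. This sketch assumes documents are loaded one after another with no parallelism, and the 0.5-second figure in the example is purely illustrative, not a measured Azure number.

```python
def added_time_hours(documents: int, extra_seconds_per_doc: float) -> float:
    """Extra wall-clock hours when documents are loaded sequentially."""
    return documents * extra_seconds_per_doc / 3600


if __name__ == "__main__":
    for docs in (1, 1_000, 1_000_000):
        print(f"{docs:>9,} docs x 0.5 s -> {added_time_hours(docs, 0.5):,.2f} h")
```

A single document is unnoticeable, but a bulk load of a million documents adds days, which is why batch jobs deserve a look before moving to a cooler tier.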

You can also restrict access to selected networks, such as RPI’s network inside of Azure or a whitelist of specific IP addresses, or make it fully private. For the best security in an enterprise scenario, you would probably restrict it rather than leave it open. However, for the purposes of demonstration, I’m going to leave it public, and I’m not going to look at any of the advanced configuration. Next, it validates all my inputs to make sure I have space allocated to my subscription on the server racks, et cetera. Most of this is invisible; occasionally, you’ll run into an error if you’re using up all of the space allocated to your account.
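Conceptually, an IP whitelist is a membership check of the caller’s address against a list of allowed addresses and CIDR ranges. The sketch below is a model of that idea using Python’s standard `ipaddress` module, not Azure’s implementation; the addresses are documentation-only ranges (RFC 5737) standing in for a real office IP.

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, allowed_ranges: Iterable[str]) -> bool:
    """True if client_ip falls inside any allowed address or CIDR range."""
    ip = ipaddress.ip_address(client_ip)
    # strict=False lets single host addresses like "198.51.100.7" act as /32 networks.
    return any(ip in ipaddress.ip_network(rng, strict=False) for rng in allowed_ranges)


if __name__ == "__main__":
    allowed = ["203.0.113.0/24", "198.51.100.7"]
    print(is_allowed("203.0.113.42", allowed))
    print(is_allowed("192.0.2.1", allowed))
```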

I’m an admin in my Azure subscription, so it’s generally not going to prevent me from doing things. However, if you’ve been granted the ability to create storage accounts, your admin can set quotas or limits on what you’re allowed to create, and this validation step will tell you whether you’re running into one. I’m going to go ahead and create this, and it’s really pretty fast. The deployment page pulls up. You can write scripts, or you don’t even need scripts: there are automation methods in here to take templates and deploy virtual machines, storage accounts, and SQL servers all at once. This is just one resource and it’s going to finish momentarily, but in larger deployments you can have it run through quite a few different items. Deployment complete. Great. To recap: we logged into the Azure portal, chose to create a new storage account, gave it a name, picked a few options for high availability, redundancy, security, and performance, and then created it. It was really that simple.
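The templates mentioned above are Azure Resource Manager (ARM) templates, which are JSON documents. As a minimal sketch, this Python builds a template for a storage account similar to the one created in the portal. The `Standard_LRS` SKU and the `2019-06-01` API version are assumptions; the demo left replication at the portal default, which may differ.

```python
import json


def storage_account_template(name: str, location: str = "centralus",
                             sku: str = "Standard_LRS", access_tier: str = "Cool") -> dict:
    """Minimal ARM template deploying one general-purpose v2 storage account."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2019-06-01",  # assumed API version
                "name": name,
                "location": location,
                "sku": {"name": sku},          # replication choice (assumed)
                "kind": "StorageV2",
                "properties": {"accessTier": access_tier},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(storage_account_template("webinardemo1"), indent=2))
```

Saved to a file, a template like this can be deployed from PowerShell with `New-AzResourceGroupDeployment -ResourceGroupName <group> -TemplateFile <file>`, which is how the same resources can be stamped out repeatedly.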

You could really run through this with all the defaults and have storage ready to go for any given virtual machine. Next step: I want to take this to a server. I’m using a server so I can connect the storage to one of our enterprise applications, but you can do this on a desktop too. I’m going to go to a server and mount this Azure storage as a share on it.

You might think, “Oh, that sounds easy,” but there are a number of steps you have to do. You have to consider how we are authenticating against this storage because even though it’s public I can’t just plug in a URL and pull up the storage. I have to have an account authorized on the local machine that is also authorized against this storage account in Azure. Now, even on top of that, you might think, “Well, can’t I just add my active directory account in Azure to the storage account?” Sort of. “Can I add the account in Azure to my local machine?” Yes, and that’s what we’re going to do.

I’m going to pull up my virtual machine. Again, this is local, on-premises. As our first step in migrating our environment over to cloud services, I’m going to work with the ImageNow application on this server. If you’re not familiar, ImageNow stores document images. Inside ImageNow there are what are called OSMs, which are chunks of disk space that contain different types of documents.

Say I want to take my least accessed documents, maybe anything over three years old for a certain department like finance (we’re not talking patient data or anything like that), and migrate them to cheaper storage. Then I’m not constantly managing disk space on a server where relatively expensive solid-state storage is at a premium; instead, those documents live on auto-growing Azure storage that’s extremely cheap for very infrequently accessed content. This is a great expansion opportunity and really easy to implement. Before I can do that, though, I need to mount the Azure share on the server so it can be accessed from there.
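Picking migration candidates is essentially a date-and-department filter. In this sketch, the `Document` record is hypothetical; in practice the metadata would come from the content system itself, not from a structure like this. The three-year cutoff matches the example above, with a leap-day fallback.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Document:
    """Hypothetical record; real ImageNow document metadata looks different."""
    doc_id: str
    department: str
    created: date


def years_before(day: date, years: int) -> date:
    """The same calendar day `years` earlier (Feb 29 falls back to Feb 28)."""
    try:
        return day.replace(year=day.year - years)
    except ValueError:
        return day.replace(year=day.year - years, day=28)


def migration_candidates(docs: List[Document], department: str,
                         today: date, years: int = 3) -> List[Document]:
    """Documents from `department` created more than `years` years before `today`."""
    cutoff = years_before(today, years)
    return [d for d in docs if d.department == department and d.created < cutoff]


if __name__ == "__main__":
    docs = [
        Document("A-1", "Finance", date(2015, 3, 2)),
        Document("A-2", "Finance", date(2019, 11, 20)),
        Document("B-1", "HR", date(2014, 6, 30)),
    ]
    for doc in migration_candidates(docs, "Finance", today=date(2020, 4, 20)):
        print(doc.doc_id)
```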

Actually, I have a couple of scripts I need to copy over. You’re going to do this through PowerShell. If you’re not familiar, PowerShell is a scripting language built into every Windows Server environment. You’ll want PowerShell 6 or 7 for Azure’s Az libraries. The default PowerShell on your server is likely 4 or 5; version 5.1 can run the Az module once it’s installed, but older versions can’t, so downloading the newer PowerShell is the simpler route.

I have a few scripts. The first thing I’m going to do is load up one of these. No, not that one. You’ll also want Visual Studio Code, which is a good development environment for PowerShell 6 and 7. I’m going to open a new terminal, which is basically just a PowerShell command prompt, and connect the account. I did this part before we started, but that’s all right: Connect-AzAccount. This gives me a code that I have to plug in on the Microsoft website to connect our sessions. It doesn’t store credentials on the server to be used later or anything; it’s purely a login from the shell so that I can begin running commands against Azure. I’ll take that code, pop over to microsoft.com/devicelogin, enter the code, and log in with my RPI account. I don’t need my two-factor authentication because I’ve already done it recently. Then I come back here, and look, I’m logged in now.

Here’s what I have: a PowerShell script, which I can share, that’s really just built off the tutorial Microsoft provides on their website. I’m going to specify the resource group this was created in, which was IMAGENOW7a, and the storage account name, which was webinarstorage, or, no, it was webinardemo1. Then I’m going to give it a file share name. Now, this is the local share name, or at least the share name as accessed from this server, so you can name it whatever you want. We’re just going to call it webinarshare, and this is what will show up locally.

A couple of things before I execute this. Oh, the last setting: because this is actually mounted as a shared drive, you have to specify the drive letter. I think I’m already using Z, which I set up when I was testing this previously, and I think I used Y as well, so I’m just going to make it X for giggles. It just has to be a drive letter that’s not in use. Oh, there’s one step I skipped, so before I execute this I’ll pop back to the other scripts. Yes, I authenticated against Azure using my credentials, but that just enables me to start running commands.
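Choosing the drive letter only requires one that isn’t taken. Here is a small hypothetical helper that searches from Z backwards, which is how X came out in the demo once Z and Y were in use. It only picks a letter and does not mount anything.

```python
import string
from typing import Iterable


def first_free_drive_letter(used: Iterable[str],
                            preference: str = string.ascii_uppercase[::-1]) -> str:
    """Return the first drive letter not in `used`, searching Z, Y, X, ... by default."""
    # Normalize entries like "c:", "Z:\\" or "y" to a bare uppercase letter.
    taken = {u.strip().rstrip(":\\").upper() for u in used}
    for letter in preference:
        if letter not in taken:
            return letter
    raise RuntimeError("no free drive letters")


if __name__ == "__main__":
    # Z: and Y: were already in use in the demo, plus the C: system drive.
    print(first_free_drive_letter({"C:", "Y:", "Z:"}))
```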

You do, however, have to store credentials on this server, like I mentioned, that are actually used to access the Azure share. The script stores a user with the same name as the storage account; it behaves like a service account that Azure creates or uses on the fly. Again, I need to specify these two configuration parameters: webinardemo1. To see what it does, open User Accounts in your settings, go to Manage your credentials, and then Windows Credentials. You’ll see a couple of entries listed under Windows Credentials; these are the ones I set up previously while proving this out.

This is where it’s going to store these credentials. If I open one up, I already have an entry from a previous share: user name AZURE\osm4, a password, and persistence set to Enterprise. I don’t think you can enter it here manually, because it has to be done through the PowerShell scripts: the script has to fetch the credentials from Azure before it can store them on the machine. I don’t even know what that password is; it’s not a user account that I created.

I think that password is really just a token. The way I add it is through this script, which pulls down the storage account and its keys (the key is essentially the token) and then adds them with cmdkey, which is the same as adding them to these Windows credentials right here. If I run this, I should see webinardemo1 added to the list of Windows credentials. A couple of caveats before I execute this. First, I’m currently logged in to the server as a user account called inowner, I believe; yes. You want to be logged in to the server as the account you want to store these credentials for.

These stored credentials are not universal to every user. There may be a way to make them universal, but I haven’t explored that. If I store them here and then log in as jmarney, my account, I won’t see them. That’s important because any enterprise application that needs to access data on the Azure storage account, whether it’s a service, an executable, or whatever, runs under a certain account, and that needs to be the same account that has the share mapped and the same account that has these credentials stored.

You can’t store the credentials once for multiple local accounts, so if you don’t do it under the right account the first time, you’ll have to do it again. To save you some troubleshooting: I originally set it up under my account and then tried to run the service under a different account, and I struggled forever figuring out why it wouldn’t let me access the data through the service account. Back to my script here, I’m going to execute this one: Run, Start Debugging. The terminal says credential added successfully. Great. I didn’t know if it would show up here until a restart, but there it is: webinardemo1.file.core.windows.net. That is the share.
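The credential step boils down to one `cmdkey /add` call. The target is the share’s host name, the user name is the storage account name with an `AZURE\` prefix (matching the `AZURE\osm4` entry shown earlier), and the password is a storage account key. The demo’s PowerShell script retrieves that key from Azure, presumably via `Get-AzStorageAccountKey`. This Python sketch only builds the command and masks the key. Running it is left as a comment, because it must run on the Windows server under the account that will actually use the share.

```python
from typing import List


def cmdkey_add_args(account_name: str, account_key: str) -> List[str]:
    """Build (but don't run) the cmdkey call that stores an Azure Files credential.

    cmdkey saves the credential for the Windows user who runs it, so run it as
    the same account your service uses.
    """
    target = f"{account_name}.file.core.windows.net"
    return ["cmdkey", f"/add:{target}", f"/user:AZURE\\{account_name}", f"/pass:{account_key}"]


def redacted(args: List[str]) -> str:
    """Printable version of the command with the key masked."""
    return " ".join("/pass:****" if a.startswith("/pass:") else a for a in args)


if __name__ == "__main__":
    args = cmdkey_add_args("webinardemo1", "<storage-account-key>")
    print(redacted(args))
    # On the Windows server itself you could then run:
    #   import subprocess; subprocess.run(args, check=True)
```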

Just to prove that, the address. Oh, no, it won’t be available yet. Sorry. I need to go back to my other script. Now I can actually mount this share, because I’ve stored the credentials we’re going to access it with. These credentials persist. You can run the storage commands as non-persistent so that the credentials are cleared as soon as the server is rebooted, but you don’t want that for these purposes. Here, they will persist across reboots.

Again, I’m going to call this webinarshare. Just to prove this out, I’m going to open it up in the portal: I go to Storage Explorer, then File Shares, and create a file share named share1. I’m not going to give it a quota, although you could. When I pull this share down, I should hopefully see it in there. This is ready to go. It should mount the X drive, although I don’t really care about that because I’m going to access it using the UNC path anyway. Executing it. Again, if you have any questions, please feel free to submit them via the GoToWebinar panel.

File share not found. Oh, so this does need to match a share that is in here. Let’s try share1. Looks like that’s it. Apologies, I had that a little backwards: the file share name does need to match a file share you have created in the storage account. Before I go any further, a couple more considerations. This can’t necessarily be done from every operating system and every operating system version; there’s a matrix on Microsoft’s website.

Essentially, what you need is an operating system that supports SMB, the Windows file-sharing protocol, and I believe the version required is SMB 3.0 or later. That’s what allows Azure file storage to be mapped as a traditional file share on a Windows machine. For example, I believe Windows 7 can’t even do it, and Windows Server 2003 isn’t supported. Not that you should really be using Windows Server 2003 anyway. You do have to make sure your OS is supported before you get started. Now, I should see this mounted as the X drive, and I don’t. I’m not entirely sure why. It did show up as a shared disk. Oh, there it is. Okay, it just doesn’t put the drive letter first.
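A quick way to reason about OS support is to compare each Windows version’s highest SMB dialect against the SMB 3.0 requirement for mounting Azure Files from on-premises. The table below reflects general knowledge of Windows SMB versions, not Microsoft’s official Azure Files matrix, so check that matrix before relying on it.

```python
# Highest SMB dialect per Windows version (verify against Microsoft's own matrix).
MAX_SMB_DIALECT = {
    "Windows Server 2003": (1, 0),
    "Windows 7": (2, 1),
    "Windows Server 2008 R2": (2, 1),
    "Windows 8": (3, 0),
    "Windows Server 2012": (3, 0),
    "Windows 8.1": (3, 0, 2),
    "Windows Server 2012 R2": (3, 0, 2),
    "Windows 10": (3, 1, 1),
    "Windows Server 2016": (3, 1, 1),
    "Windows Server 2019": (3, 1, 1),
}

# Mounting an Azure file share from outside Azure needs SMB 3.0 or later.
REQUIRED_FOR_ON_PREM = (3, 0)


def can_mount_from_on_prem(os_name: str) -> bool:
    """Tuple comparison handles dialects like 3.0.2 versus 3.0 correctly."""
    dialect = MAX_SMB_DIALECT.get(os_name)
    return dialect is not None and dialect >= REQUIRED_FOR_ON_PREM


if __name__ == "__main__":
    for name in ("Windows Server 2003", "Windows 7", "Windows Server 2016"):
        print(name, can_mount_from_on_prem(name))
```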

Now I do have access to the Azure share. For a quick test, I’m going to create a document called test, open it up, type hello world, and save it. Back in my portal, I should be able to see the file, and sure enough, there it is. I don’t know if there’s actually a viewer; I think I would have to download it. Yes. Anyway, you’re not really trying to access your files through the portal.
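As the "File share not found" error showed, the share name used when mounting must match a share that already exists in the storage account. This sketch checks Azure’s file share naming rules (3 to 63 characters; lowercase letters, digits, and hyphens; starts and ends with a letter or digit; no consecutive hyphens) and builds the UNC path used to reach the share. The `existing_shares` set is a stand-in for whatever you’d list from the portal.

```python
import re

# Share names: 3-63 chars, lowercase letters/digits/hyphens, letter or digit at both
# ends, and every hyphen followed by a letter or digit (so no "--").
_SHARE_NAME = re.compile(r"[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}")


def is_valid_share_name(name: str) -> bool:
    return _SHARE_NAME.fullmatch(name) is not None


def unc_path(account_name: str, share_name: str, existing_shares: set) -> str:
    """UNC path for mounting; the share must already exist in the storage account."""
    if not is_valid_share_name(share_name):
        raise ValueError(f"invalid share name: {share_name!r}")
    if share_name not in existing_shares:
        raise LookupError(f"file share {share_name!r} not found in {account_name}")
    return f"\\\\{account_name}.file.core.windows.net\\{share_name}"


if __name__ == "__main__":
    print(unc_path("webinardemo1", "share1", {"share1"}))
    try:
        unc_path("webinardemo1", "webinarshare", {"share1"})
    except LookupError as err:
        print(err)
```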

The short version of this demonstration is done. I’ve mapped the file share to the server, and I can now begin storing files from my on-premises server in the cloud. I can use it as expandable disk space, and I could create multiple shares with different quotas if I wanted to. There are a lot of options. I do have a stretch goal for this demonstration, which is to show how we can begin migrating documents from my Perceptive content store out to this Azure share. Before we do that, I’m going to take a peek to see if we have any questions. None yet. Again, if there’s anything you’d like to see, we’re happy to explore and demo it. All right.

While I’m thinking about it: a lot of the enterprise applications we work with rely on a document or content store, so that’s a logical place to begin a migration if you don’t want to do a full migration of VMs, or even platform as a service, all in one go. Another logical place to begin that expansion, or maybe step two, is your databases, because databases really are very storage-centric.

There are a couple of different ways you can do that. On the latest versions of SQL Server, you can elect a hybrid approach, where you still manage the application on your local on-premises server, but it automatically leverages Azure storage accounts, I think, to manage expanding storage, speed of storage, and so on. Alternatively, you can fully migrate SQL Server up to Azure. Within the Azure portal, you can spin up a new SQL Server, and it functions essentially the same way your on-premises version does.

You can still use SQL Server Management Studio on your computer to connect to this Azure instance of your SQL database. The great thing about SQL databases in Azure is that they have so many settings for automatic scaling, with the flexibility to scale up and down and in and out. They also allow for redundancy in a way you really can’t replicate on-premises without a lot of effort.
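Connecting to an Azure SQL database looks like connecting to any SQL Server; the server name just ends in `database.windows.net` and connections go over TCP port 1433 with encryption on. This sketch builds an ADO.NET-style connection string in the format the portal displays. The server, database, and user names are hypothetical, and a real password belongs in a secret store, not in code.

```python
def azure_sql_connection_string(server: str, database: str, user: str, password: str) -> str:
    """ADO.NET-style connection string for Azure SQL Database with SQL authentication."""
    parts = {
        "Server": f"tcp:{server}.database.windows.net,1433",
        "Initial Catalog": database,
        "User ID": user,
        "Password": password,
        "Encrypt": "True",
        "TrustServerCertificate": "False",
        "Connection Timeout": "30",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"


if __name__ == "__main__":
    # Hypothetical names; in SSMS you would enter just "rpi-demo.database.windows.net".
    print(azure_sql_connection_string("rpi-demo", "inow", "sqladmin", "<password>"))
```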

You can create a new SQL database right here in Azure, and there aren’t even a ton of options. One thing I will show you is the different pricing models for building your SQL Server. Option one, the more expensive but for many purposes more necessary one, is provisioned, which means you’re reserving cores and RAM. You’re saying, “I’m carving out this little corner of this Microsoft data center for my SQL Server.” Because it’s always provisioned for you, you’ll never be without that hardware power; however, you’ll always pay for that hardware power, even when you aren’t using it.

There are different hardware configurations and so on that you can specify here, but essentially you’re saying, “I need this much space and this many cores.” The other option, which I think you’d typically want for less intense applications, and which is more expandable and a lot cheaper, is serverless. What you’re saying here is, “Here’s the most I want to pay, and here’s my baseline and minimum,” and Azure autoscales the entire thing as needed.

That’s especially true for something like a test or development environment, where a little extra latency doesn’t matter as much, usage is extremely inconsistent, and even when it’s used, throughput is extremely inconsistent. Unless you’re doing organized testing where you’re really pushing volumes through your system, I think a serverless database is a no-brainer. In addition, these databases have auto-pause: after a set amount of time with no transactions, the database pauses.

Keep in mind that if it’s a database for an enterprise application, there’s almost always going to be some kind of heartbeat or consistent transactions taking place. As long as those transactions keep coming from the enterprise app, the database will stay alive; it only pauses if the database itself has seen zero activity. So, as a quick aside, that’s the database side. There are a lot of options, and I can definitely explore them with you in more depth in a different demonstration.
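The provisioned-versus-serverless trade-off, and why a heartbeat defeats auto-pause, can be modeled in a few lines. This is a deliberate simplification: it uses hourly average vCore usage, bills each online hour between a minimum and maximum, and bills nothing once the idle time exceeds the auto-pause delay. Azure’s real serverless billing is per second and also accounts for memory, and the price below is a placeholder, not real pricing.

```python
from typing import Sequence


def serverless_vcore_hours(usage: Sequence[float], min_vcores: float, max_vcores: float,
                           autopause_delay_hours: int) -> float:
    """Billed vCore-hours for hourly average usage in a simplified serverless model.

    While online, each hour bills at least `min_vcores` and at most `max_vcores`.
    After more than `autopause_delay_hours` consecutive idle hours (usage == 0),
    the database is paused and bills no compute until activity resumes.
    """
    billed = 0.0
    idle_hours = 0
    for used in usage:
        idle_hours = idle_hours + 1 if used == 0 else 0
        if idle_hours > autopause_delay_hours:
            continue  # paused: no compute billed this hour
        billed += max(min_vcores, min(used, max_vcores))
    return billed


def provisioned_vcore_hours(vcores: float, hours: int) -> float:
    """Provisioned compute bills every hour, used or not."""
    return vcores * hours


if __name__ == "__main__":
    PRICE = 0.10  # placeholder price per vCore-hour, NOT real Azure pricing
    quiet_dev_db = [2.0, 0.0, 0.0, 0.0] * 6  # one busy hour, then idle, repeated
    heartbeat_db = [0.05] * 24               # light but constant app heartbeat
    for label, usage in (("dev", quiet_dev_db), ("heartbeat", heartbeat_db)):
        cost = serverless_vcore_hours(usage, 0.5, 4, 1) * PRICE
        print(label, round(cost, 2), "vs provisioned", provisioned_vcore_hours(2, 24) * PRICE)
```

The heartbeat database never pauses, so it bills the minimum every hour, which is exactly the caveat about enterprise applications above.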

For now, I’m going to finish up with my stretch goal of making sure I can store documents from Perceptive out to this share and access them. The first thing I need to do, and I’m not going to walk through the entire process, is set up a new OSM. Just to show you the disk structure, there are three primary OSMs here (ignore the other folders): 1, 2, and 3. 2 and 3 aren’t used that heavily; 1 is my primary document store. If I dive in here, the file names are all obfuscated, but ultimately these are just images. If I open this one with... I don’t know what it is. It might be a PDF. It’s neither. That’s a bad example.

Open with... let’s see if IrfanView can figure it out. There, it’s a TIFF. It’s been obfuscated in the file system, but here it is. I need to create a fourth OSM out on the share so that it can begin building the file system structure to actually store documents. I already have one set up; there are some command-line steps you need to do in Perceptive to actually create it. If anybody is interested, we can run through them, but for the sake of demonstration, I’m going to log into the SQL Server and just repoint that file path to our new share.

I’m already logged into my SQL Server and everything. IN_OSM_TREE_FSS is the table that stores the configuration for my OSMs. As you can see here I’ve got some environment variables, but OSM 1, 2, 3, 4, you’ll see that I have line 6, my OSM4, which you may have seen I already had mounted from proving this out previously. It’s referencing this UNC path out to my file share. I’m just going to edit this row really quick to point to our new share. There’s not enough space on the disk. When live demos go wrong. I’m going to have to clear out some space really quick. I don’t know what’s going on. Zero bytes free on the C drive. That’s great.

Whatever this is, we don’t need it; it’s two years old. A lesson in doing proper server maintenance on your sandboxes: I don’t even think we have alerts set up on this. We have backups that are three years old, so we’re going to delete those too. That should free up some space. There we go. I’m just going to change this file path. The server location was, again, webinardemo1, and the share was share1. This points to a directory that doesn’t exist yet; I’m going to copy it over from the one I already have set up, just for demonstration’s sake, but first I change this. Then I exit, go back over to the other server, and log into ImageNow (Perceptive). I’m going to navigate to a workflow where I set up a couple of files that were on the other cloud share.
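Repointing an OSM is a prefix swap on its UNC path: the root changes and everything below it stays the same. The column in IN_OSM_TREE_FSS that holds the path isn’t shown here, so this sketch only computes the new value you would write. The old root used in the example is a hypothetical placeholder.

```python
def repoint(path: str, old_root: str, new_root: str) -> str:
    """Replace the UNC root of an OSM path, keeping the rest of the path unchanged.

    Windows paths are case-insensitive, so the root comparison ignores case.
    """
    old = old_root.rstrip("\\")
    new = new_root.rstrip("\\")
    if not path.lower().startswith(old.lower()):
        raise ValueError(f"{path!r} is not under {old_root!r}")
    rest = path[len(old):]
    # Guard against partial matches such as "...\\osm4" vs "...\\osm40".
    if rest and not rest.startswith("\\"):
        raise ValueError(f"{path!r} is not under {old_root!r}")
    return new + rest


if __name__ == "__main__":
    OLD = "\\\\oldstorage.file.core.windows.net\\osm4"  # hypothetical previous share
    NEW = "\\\\webinardemo1.file.core.windows.net\\share1"
    print(repoint(OLD + "\\OSM4", OLD, NEW))
```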

Since I haven’t migrated the actual objects yet, they’re going to error when I try to open them, saying the file can’t be found. I thought the change might take effect without restarting anything, so let me see. No, it’s still referencing the old file path, so let me restart the server really quick and it will reference the new path I just updated. Starting the server process. I think that’s the only one that matters. I might restart OSM Agent too; I think that one only handles deleting documents, but let’s just check.

As expected, it has taken the change that I put into the database: it’s referencing the new file path and looking for its image at \\webinardemo1.file.core.windows.net\share1. I know it’s not there. I can navigate out to the share that I set up, and I’m going to delete my test document. There’s nothing in here, so of course it’s not going to be there. There are a couple of ways I can do this. I could migrate individual documents back and forth using command-line tools, but in a real-world scenario I’m probably going to take OSM chunks and shift them over, so it might just be a straight copy.
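The path the server looks for follows the Azure Files UNC convention, `\\<storage-account>.file.core.windows.net\<share>`. A small helper like the hypothetical `azure_files_unc` below builds that path from the account and share names and rejects account names that break Azure's rule of 3 to 24 lowercase letters and digits. The default `suffix` is the public Azure cloud endpoint; sovereign clouds use different suffixes.

```python
import re

# Storage account names: 3-24 characters, lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def azure_files_unc(account: str, share: str, *subdirs: str,
                    suffix: str = "file.core.windows.net") -> str:
    r"""Build \\<account>.<suffix>\<share>[\<subdir>...] for an Azure file share."""
    if not _ACCOUNT_NAME.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    parts = [f"{account}.{suffix}", share, *subdirs]
    return "\\\\" + "\\".join(parts)


if __name__ == "__main__":
    print(azure_files_unc("webinardemo1", "share1"))
```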

I’m going to open up OSM4, which is where I had this set up previously. If I dive into the file system here, I can see that there are a couple of images. The one I’m after is this one, Multipage TIFF Example 1. All I’m going to do is copy this entire structure over into my new share, lifting it off one Azure share and putting it onto another. I paste, and it writes in. Notice this is not incredibly slow, even though I selected cold storage. Another thing worth pointing out is that the majority of the cost associated with Azure storage is in writing.
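The drag-and-drop copy of the OSM4 structure can also be scripted. This sketch assumes Python 3.8+ (for `dirs_exist_ok`) and uses a hypothetical helper, `copy_osm_tree`, that copies a directory tree and then compares file sizes as a cheap sanity check. It is not a checksum, and it does not touch any database references, which still have to be repointed as in the database edit earlier in the demo.

```python
import shutil
from pathlib import Path
from typing import List


def copy_osm_tree(src: str, dst: str) -> List[str]:
    """Copy the directory tree at src into dst, then list files whose size differs.

    Returns relative paths that are missing or have a different size in dst;
    an empty list means every source file arrived with the expected size.
    """
    shutil.copytree(src, dst, dirs_exist_ok=True)  # merges into an existing dst
    src_root, dst_root = Path(src), Path(dst)
    mismatches = []
    for source_file in src_root.rglob("*"):
        if not source_file.is_file():
            continue
        rel = source_file.relative_to(src_root)
        target = dst_root / rel
        if not target.is_file() or target.stat().st_size != source_file.stat().st_size:
            mismatches.append(str(rel))
    return mismatches
```

For example, `copy_osm_tree(r"\\<old-account>.file.core.windows.net\<old-share>\OSM4", r"Z:\OSM4")` with your own paths; `shutil.copytree` uses `copy2` by default, so modification times are preserved, which matters if you later select cold content by age.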

With applications like this, where you most often write each file once and then mostly read it, reading is extremely cheap: a fraction of a cent per read, if not a fraction of a fraction. Once you do the first copy, these files don’t tend to change much, so in my opinion there’s tremendous value here. I migrated that whole OSM, which contains only these two images, the first two here in this queue. The third one is in the original OSM, local to this server.
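The write-once, read-many argument can be made concrete with a simple transaction-cost model. Azure lists storage transaction prices per 10,000 operations; the sketch below takes those prices as inputs, and the rates in the example are made-up placeholders, not actual Azure prices. It also simplifies heavily: each document counts as one write and each open as one read (real SMB traffic issues several operations per file), and it ignores the monthly per-GB capacity charge.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TransactionRates:
    """Prices per 10,000 operations; supply your own, these are not Azure list prices."""
    write_per_10k: float
    read_per_10k: float


def transaction_cost(writes: int, reads: int, rates: TransactionRates) -> Tuple[float, float]:
    """Return (write_cost, read_cost) for the given numbers of write and read operations."""
    write_cost = writes / 10_000 * rates.write_per_10k
    read_cost = reads / 10_000 * rates.read_per_10k
    return write_cost, read_cost


if __name__ == "__main__":
    # HYPOTHETICAL numbers: 50,000 documents migrated once, then 2,000 opens a month.
    rates = TransactionRates(write_per_10k=0.10, read_per_10k=0.01)  # placeholders
    migration_writes, _ = transaction_cost(50_000, 0, rates)
    _, monthly_reads = transaction_cost(0, 2_000, rates)
    print(f"one-time write cost: {migration_writes:.4f}")
    print(f"monthly read cost:   {monthly_reads:.4f}")
```

Splitting the result into a one-time write cost and a recurring read cost mirrors the point in the transcript: the write side is paid once at migration, and the ongoing side scales only with how often cold documents are opened.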

That’s a PDF that’s erroring. I’ve got other documents in here. Let’s see. That’s a form. I’m getting bitten by the sandbox bug again. There, an image. This one’s loading from OSM1, which is local to this server. You see how fast that was, right? Open it, and there’s the image. Just like that. This image is maybe 50 kilobytes; let’s see if we can tell. No, it’s actually 8 kilobytes. It should be fast anyway, since it’s local. For comparison, the image that we’re going to open next is 1.5 MB. We have another one that’s 182 kilobytes, so that one’s a little closer. You’re going to see it’s a little slower to open. Theoretically, this should open now. This is the item I transferred over to our new share. There it is. That’s the 1.5 MB file.

In my opinion, that meets the criteria for acceptable performance for a cold-storage document. I have not done any benchmarking to see what the different performance levels would be, but for infrequently accessed information, that’s more than acceptable. The second document was much smaller, 182 KB. That’s the PDF that won’t open, which is a local computer problem, but it finished loading much faster. Even with the cheapest, lowest-performing storage that Azure has available, we were able to implement this and get acceptable load times for documents.
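Since no benchmarking was done in the demo, one minimal way to get numbers is to time how long it takes to read a file end to end from a local OSM and from the mounted share. This measures only the storage leg, not the viewer's rendering time, and the function name `time_reads` is hypothetical.

```python
import statistics
import time
from pathlib import Path
from typing import Dict


def time_reads(path: str, repeats: int = 5, chunk_size: int = 1 << 20) -> Dict[str, float]:
    """Read a file end to end `repeats` times and report wall-clock timings in seconds.

    The first read is reported separately: later reads are often served from
    the operating system's cache and say little about cold-storage latency.
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        with open(path, "rb") as f:
            while f.read(chunk_size):  # read in chunks until EOF
                pass
        timings.append(time.perf_counter() - start)
    return {
        "bytes": float(Path(path).stat().st_size),
        "first_s": timings[0],
        "median_s": statistics.median(timings),
        "max_s": max(timings),
    }
```

Call it once on a document in a local OSM and once on a document on the share (your own paths) and compare `first_s`.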

Again, the less frequently each file is accessed, the slower it will get over time, but only up to a point. I haven’t yet seen anything take more than five seconds to load, and some of that is going to depend on your network and your local hardware anyway. That is the majority of the presentation. Just to walk through what we did: I created a storage account in Azure. I stored credentials for that Azure share locally on my on-premises machine using a PowerShell script. Then I used another PowerShell script to mount that Azure storage account as a local share and also as a local drive.
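The two PowerShell scripts from the demo are not shown, so the following is a hedged Python equivalent of the same two steps: storing the account key with Windows `cmdkey` and mapping the share with `net use`. The `localhost\<account>` username form follows Microsoft's Azure Files documentation; the function names are hypothetical, and `dry_run=True` only prints the commands with the key masked. Azure Files over SMB needs outbound TCP port 445 to be open, and because `cmdkey` entries are stored per Windows user, they have to be created under the account the content server's services run as.

```python
import subprocess
from typing import List


def credential_command(account: str, key: str) -> List[str]:
    """cmdkey call that stores the storage account key for the Azure Files host."""
    host = f"{account}.file.core.windows.net"
    return ["cmdkey", f"/add:{host}", f"/user:localhost\\{account}", f"/pass:{key}"]


def mount_command(account: str, share: str, drive: str = "Z:") -> List[str]:
    """net use call that maps the share to a drive letter and keeps it across logons."""
    unc = f"\\\\{account}.file.core.windows.net\\{share}"
    return ["net", "use", drive, unc, "/persistent:yes"]


def redact(cmd: List[str]) -> str:
    """Render a command for logging with the /pass: value hidden."""
    return " ".join("/pass:****" if arg.startswith("/pass:") else arg for arg in cmd)


def mount_share(account: str, share: str, key: str,
                drive: str = "Z:", dry_run: bool = True) -> None:
    """Store credentials, then map the drive; with dry_run, only print the commands."""
    for cmd in (credential_command(account, key), mount_command(account, share, drive)):
        print(redact(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # Windows only: cmdkey.exe and net.exe


if __name__ == "__main__":
    # HYPOTHETICAL key placeholder; dry_run=True only prints the commands.
    mount_share("webinardemo1", "share1", key="<storage-account-key>")
```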

Then, although I didn’t demonstrate this, I created a new OSM, or really just a new file structure. The same setup can be done with OnBase, AppXtender, or IBM System Content. Any kind of content storage can leverage this, because as far as this server (or at least the application) is concerned, this is just a local disk. There’s no real reason it wouldn’t work. We aren’t looking at the even cheaper, better-performing options like Blob storage or Table storage, because the application would have to support those directly. Perceptive supports Amazon S3 storage for that, but not Azure, and OnBase doesn’t support any of it.

In other applications, it varies, but the way we set this up is absolutely doable for practically any Windows application. We set up a new OSM, which is just our object store, our file structure. We pointed our OSM at the share, and then we migrated some documents into that share. Now we have our documents available in the cloud. If I did that en masse, I could reduce disk usage on the local server and free it up for other purposes where we need high performance. Even better, the storage now scales automatically.

I don’t necessarily have to manage my disks running out of space, and I don’t want to add a ton of disk space to this server that might never be used; that’s just unnecessary cost. By keeping the storage in Azure, I don’t need any more local disk space than I actually use, and I virtually never need to consider adding disk space to this server again. I believe there are cost benefits from the price of the service itself, as well as labor benefits from not having to manage your disk space anymore.

As for making sure that documents are continually migrated to cold storage, this could be done through a scripted method. You could do it through Perceptive, using their retention tool, Retention Policy Manager. I believe OnBase has an equivalent, and other content applications generally have some sort of retention or archive tool. Even if they don’t, or if you don’t want to pay for it, this is very simple to do through scripting.
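A scripted approach can start by finding what is cold. Because the demo migrates whole OSMs rather than individual files, this sketch reports top-level OSM directories whose newest file is older than a cutoff, along with how many bytes moving them would free locally. It only identifies candidates: actually moving documents still has to keep the application's OSM references consistent, either by moving a whole OSM and repointing it as in the demo or through a retention tool. The directory layout and the name `cold_osm_dirs` are assumptions.

```python
import time
from pathlib import Path
from typing import Dict, Optional, Tuple

SECONDS_PER_DAY = 86_400


def cold_osm_dirs(root: str, min_age_days: float,
                  now: Optional[float] = None) -> Dict[str, Tuple[float, int]]:
    """Find top-level subdirectories of root whose newest file is older than min_age_days.

    Returns {subdirectory name: (age in days of its newest file, total bytes)}.
    Uses modification time, because last-access times are often unreliable.
    """
    now = time.time() if now is None else now
    cutoff = now - min_age_days * SECONDS_PER_DAY
    cold = {}
    for sub in sorted(Path(root).iterdir()):
        if not sub.is_dir():
            continue
        newest, total, count = 0.0, 0, 0
        for f in sub.rglob("*"):
            if f.is_file():
                st = f.stat()
                newest = max(newest, st.st_mtime)
                total += st.st_size
                count += 1
        if count and newest < cutoff:  # ignore empty directories
            cold[sub.name] = ((now - newest) / SECONDS_PER_DAY, total)
    return cold
```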

That’s it. I can now take any questions if anything has come up. I didn’t see any come in. I hope this was valuable. I would love to explore more of the Azure capabilities if there’s any interest. I believe that we support a lot of applications that are behind the curve and it’s really up to us to explore the best architecture and the best deployment methodologies to take advantage of these technologies that are available.

It would be ideal if I could take the Perceptive server, spin it up in a Docker container, and deploy it serverless as a platform-as-a-service offering on Azure. I can’t do that; you have to have your Windows virtual machine. Until we get to that place, I think we have to keep pushing for the best strategies to get there. Okay, that’s it. Thank you for joining. I hope you’ll join us again in two weeks for our RPA webinar. Again, tons of content coming up. Thanks for joining, and have a great weekend.



