Migrating Enterprise Content & Data into the Cloud

Organizations that have implemented large enterprise content and business process management solutions understand the burden of data and content storage. From server and storage costs to ongoing support and monitoring, managing large amounts of content and data on-premises or in your virtual environments consumes time and resources.

Find out how you can migrate your enterprise content and data, including your Perceptive Content OSM and INOW database, into the cloud. Cloud-based storage saves time and resources by off-loading time-consuming tasks, monitoring, and issue management, while maintaining performance and system availability.

Transcript

John Marney:

Hi everyone. Thanks for joining us for another RPI Consultants webinar Wednesday. This is Michael and John part two.

Mike Madsen:

We’re back.

John Marney:

This afternoon, we’re discussing how to migrate your data and your content into the cloud. This is going to cover a wide variety of cloud topics, so expect this webinar to run a little longer than most of ours, probably just over half an hour. So, buckle in, but I think we’ve got some really good material ready for you.

As far as other upcoming webinars: next month, on Wednesday, November 6th, we have a Kofax-themed webinar series: What’s New in Kofax TotalAgility 7.6 (subtitle: The Elimination of Silverlight), and then in the afternoon, What’s New in Kofax ReadSoft Online. If you haven’t joined us for our office hours, that’s a different kind of webinar where we take a deep dive into a technical topic with hands-on demonstrations, taking questions and demo requests from attendees. We hold one of those each month.

The October one covers Perceptive Content application plans, and the November office hours cover Perceptive Experience. Most of you know me. My name is John Marney, manager of the Content and Process Automation practice here at RPI. That’s us here in Kansas City. I have been doing this for around a decade now, as Michael reminded me just a few minutes ago, and that made me feel really good, and I do consider myself an AP automation guru.

Mike Madsen:

And hello, I’m Mike Madsen. I’m a lead consultant here, primarily working with Perceptive Content and Brainware, and I work a lot with back office and higher education. As the office Dungeon Master, we’re hoping for a natural 20 on this webinar.

John Marney:

Oh, yes. All right, so our agenda today: first we’re going to cover what cloud is. Probably all of you know what it is, but we’re going to cover it in the context of this discussion, and we’re going to discuss how the cloud applies to your actual content and business process applications.

We’re going to cover a case study of something we’ve actually done. In this case, it’s Perceptive OSMs with Azure storage. However, that case study can apply pretty much directly to a Kofax use case or an OnBase use case. And then we’re going to give you some strategies to get started with your cloud migration.

So, why should we hedge this webinar a little bit, Mike?

Mike Madsen:

Well, as everybody knows, cloud is constantly evolving. What we’re covering today is as up to date as we can make it today, but for all we know something could change tomorrow. So always check your provider’s website and take a look at your documentation, just to be sure.

John Marney:

And this information is true right now, to the best of our ability. But not only do things change; sometimes we get things wrong.

So, let’s jump in. What is cloud? What if I told you that the cloud is just someone else’s computer, or, more appropriately, a whole bunch of people’s computers? So, first let’s talk about the infrastructure that actually goes into the cloud, and you’re hearing this for the first time ever.

This is a John Marney certified marketing term. I Googled it. Nobody else has done this before. So, I’m breaking the cloud down into two different types of implementations. The first is your bright cloud and this is really the best version of cloud. And this means that you’re taking everything you have today and really re-envisioning it and reapplying it into the cloud. So, it takes your data and your files and your applications and distributes them across multiple servers and data centers.

That’s largely driven by a Linux back end, though you would never know it, because the traditional servers, file systems, et cetera, don’t exist as far as you’re concerned. There’s nothing for you to go manipulate and drag and drop, and there’s no control panel on a Windows server, right? So, there’s less support for specific applications. You’re not going to find, for the most part, OnBase 18 supported as an Amazon Web Services offering.

It’s mostly web-based services that can be used in combination with each other. So, it is a service-oriented architecture. And then you have the dark cloud. Dark cloud is basically just what you’re doing today, but on someone else’s hardware. It lets you spin up Windows or Linux virtual machines to run whatever you want. As far as your files and storage are concerned, you can use blobs, which some applications support today, or you can use your traditional file directory system. And this is what allows you, for the most part right now, to map that cloud storage to your on-premises traditional file systems and directories.

So, what are the technologies involved? This is mostly bright cloud versus dark cloud. So, you’ve got blobs versus file shares. Blobs support a lot more file types, file structures, and data types; it’s not just files. File shares are your more traditional file system, like what you’d find in Windows. If you want to map storage to a Windows file system, like a mapped Z: drive pointing to an Azure file share, it must support a protocol called SMB 3.0. On top of that, there are a number of options whether you’re using blobs or file shares. The three main pieces are your replication, your performance, and your location.

Mike Madsen:

This is generally one of the easier first steps into cloud if you’re trying to migrate that direction.

John Marney:

Absolutely. You want to look at your files first. So, replication is how redundant this data is. All of the options start with a lot of nines, and it’s really deciding how many nines you want. In Azure, for example, durability runs from 11 nines for locally redundant storage up to 16 nines for geo-redundant storage.
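To put the nines in perspective, here is a small Python sketch. It assumes, as a simplification, that a durability of n nines means each stored object independently has a 10^-n chance of being lost in a year. Azure, for example, quotes at least 11 nines for locally redundant storage (LRS), 12 for zone-redundant storage (ZRS), and 16 for geo-redundant storage (GRS). The function names are illustrative only.

```python
def annual_loss_probability(nines: int) -> float:
    """Per-object chance of loss in a year for a durability of `nines` nines.

    For example, 11 nines (99.999999999%) leaves a 10**-11 chance of loss.
    """
    return 10.0 ** -nines


def expected_annual_loss(object_count: int, nines: int) -> float:
    """Expected number of objects lost per year, treating each object independently."""
    return object_count * annual_loss_probability(nines)


if __name__ == "__main__":
    documents = 3_000_000  # roughly one year of intake for the case-study client
    for nines in (11, 12, 16):  # Azure LRS, ZRS, and GRS durability targets
        lost = expected_annual_loss(documents, nines)
        print(f"{nines} nines: {lost:.1e} documents lost per year")
```

Even at 11 nines, the expected loss for three million documents is on the order of 0.00003 documents a year, which is why the most basic tier is often enough for content that isn’t mission critical.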

Performance-wise, we’re generally talking about hot versus cold storage. Cold storage may take longer for the service to retrieve; hot storage is meant to be more readily available. And then location is the region where the actual data center exists. But it can also mean how redundant the data is within a given data center or region: if they suffered some sort of hardware failure or loss, how quickly could that data be retrieved?

Next, you’ve got cloud databases versus traditional databases such as SQL Server or Oracle.

Mike Madsen:

Yeah. The cool thing about cloud databases is that we’re no longer talking about just a standard database table, where you may store string values or something like that. Cloud DBs let us store whole objects in specific locations, where we and even our applications can access them. So, it’s really interesting what you can get into with a cloud DB.

John Marney:

Yeah, you really could store tables inside of tables inside of tables. So, it’s not really a traditional relational database, though you can structure it to act very much like one. And there is some ability to migrate existing SQL databases into a cloud database.

So, for example, Azure offers Cosmos DB. There is a migration path for that, but it may not necessarily be the wisest way to implement. What we’re going to talk about a little bit later is a hybrid approach that lets you use your traditional SQL Servers alongside some cloud database services. And then lastly, you have cloud services versus plain virtual machines.

So, cloud services seek to fill gaps or replace specific components inside your organization. We’re talking about things like web app development and app hosting; your Visual Studio work could all take place in the cloud. Data management and access management, so things like your Active Directory. Your virtual machines, on the other hand, are meant for more specific applications that need to run on a Windows or Linux server. And there are more advanced services available, such as mixed reality APIs, the Internet of Things, and even the hottest trend in cloud technology, blockchain. And we know what that is.

Mike Madsen:

The nice thing about cloud services is that in a back-office situation where you have approvers who are on the go, it’s much easier for them to log in wherever they are and do what they need to do.

John Marney:

Absolutely.

So, there are a ton of providers out there, right? We break these down into three tiers. You’ve got your pure commercial providers, which are the big players: Amazon, Microsoft, Oracle, IBM, whoever else at this point. Everyone’s trying to get into it because there’s real money to be made there. Then there’s mixed consumer and commercial: Box, Dropbox, Google Drive, and so on. I definitely wouldn’t discount these as business solutions. In many cases, providers like Box are our go-to for business solutions, and they can actually be easier to stand up and integrate with than the pure commercial services.

And then you have private providers. These are typically all built on the commercial providers. They’re hosts for Amazon, or people who can help you manage it, but they add services, SLAs, and other things on top to assist you. So, let’s walk through some strategies for the layers at which this can be implemented.

Mike Madsen:

Yep. There are a lot of different layers, but keep in mind that cloud can be a little confusing to talk about, because you have to split it up based on what works best for you. Obviously, in a perfect world we would want to move to what we’ll get to, Layer Four – Enterprise, where everything is in the cloud. But that just doesn’t work in every scenario.

So, looking at it in layers makes it a little less overwhelming when you’re considering moving your business to the cloud. Layer one would just be standard file storage. This hearkens back to a couple of slides ago, when we talked about some of the different cloud offerings. File storage is like, if we’re running Perceptive Content, moving our OSMs so that we no longer have them internally on a local server. We have them stored somewhere else, so we can be sure they’re backed up, we don’t ever have to worry about losing them, and we always have access to them.

John Marney:

Right, so if I wanted to give this a moniker, I would probably call it storage as a service.

Then layer two is really just taking that storage to the next level. It’s giving you the redundancy and the backup security and applying it to your data in your databases. So, that’s your next largest storage need and it will also give you some improved disaster recovery. So, this is layering data as a service on top of your storage as a service.

Mike Madsen:

Then with applications, that’s essentially those hosted applications we talked about a moment ago. We can link that in with Active Directory, everything that you need to log in and run your applications. No need to spin up your VMs and run through those every single time anymore; we can just connect through a URL.

John Marney:

And here’s where you can reduce the need for on-premises virtual machines, if nothing else, and really reduce the need for virtual machines and infrastructure that you manage altogether. But we also want to begin using more of the actual cloud services available. Email through Office 365 is a perfect layer three application. Active Directory, domain controllers, et cetera, can all exist in a purely cloud-based format now.

Mike Madsen:

And layer four just pulls all of those together. So our enterprise, our series of applications, everything that we’ve got on our system is now moved to the cloud. We’re using that as a complete product for our business. So that’s really the gold standard of moving everything to an enterprise type cloud solution.

John Marney:

Absolutely. And you’ll find that your current business applications may not translate to layer four and will require some re-architecture and some reinvention, but these providers know this, and they’re beginning to work on layer four applications of their own.

Okay, so what we’ve talked about is cloud technology in general. It does not apply specifically to Hyland, Perceptive, or Kofax products. However, we are going to run through what they support specifically, because that is where most of our clients are coming from. You’re going to find that most of them are the same. It’s not super advanced, but there are some intricacies.

And grandma’s going to ask us, “Cloud computing? Computers can fly?” “No. No, grandma.” For Perceptive Content, Hyland offers a service through the Hyland Cloud to host everything for you. On top of that is their own ShareBase offering, which is also hosted through Hyland Cloud. ShareBase is for more distributed file sharing, rather than sharing within the application. Keep in mind, the Hyland Cloud is basically a layer three offering that they’re presenting as layer four. It’s still just a virtual machine, the same thing that you could run on premises.

Mike Madsen:

The nice thing about Perceptive Content is that it also allows us to use external OSM plugins. Using Amazon S3, we can take all of your OSMs and place them in the cloud, so that you reference them through your cloud service instead of keeping them internally on your local machine. Kind of like what we mentioned before.

John Marney:

And this is probably one of the biggest ones to call out, because Amazon S3 is an actual layer three cloud service, not just a traditional file structure on someone else’s machine. This lets you take advantage of the best redundancy, the best speed, and everything else that Amazon, or the cloud, has to offer.

Mike Madsen:

We talk a lot about disaster recovery and what we’re going to do if we lose our OSMs. Well, another thing to consider is the maintenance aspect: having to keep an eye on those drives to make sure they aren’t getting too full. Once you move everything to the cloud, if you need more space, all you have to do is buy more space. So, it’s really-

John Marney:

And it can happen automatically.

Mike Madsen:

Yeah. From a maintenance perspective, it’s much easier.

John Marney:

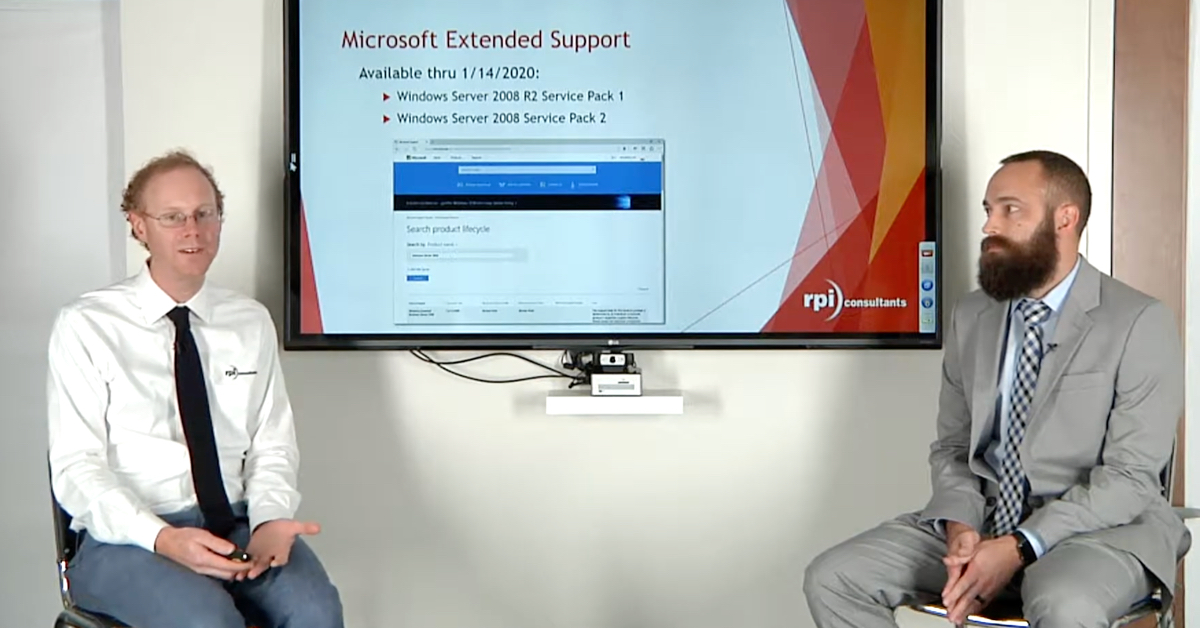

So, for OnBase you really have the same kind of thing. No cloud services are supported yet, according to the Hyland Community portal. They have stated that you can probably make it work on an Azure or Amazon VM, but they’re not going to support you. Spoiler alert: it works. However, just like Perceptive, they also offer OnBase on their own hosted environment, called Hyland Cloud Service.

Kofax TotalAgility is a little bit different. Again, Kofax offers it hosted on their hardware, but they do support you implementing it on Microsoft Azure virtual machines.

Mike Madsen:

Mm-hm (affirmative), and it’s not really specifically called out in the documentation, but we have had success with using AWS with Kofax TotalAgility.

John Marney:

And finally, we threw this slide in here because we work with it a lot and want to present the information to you: Kofax RPA. Robotic process automation is one of the things that makes more sense on a cloud platform than as a cloud service. Ultimately, it needs a web browser to work most of the time, which means it needs to be installed on an operating system. So, if you’re not running it on Windows Server, you’re probably doing yourself a disservice.

Mike Madsen:

And we put some information here about which operating systems these work with, but there are a lot of pieces to RPA. All we’ve displayed here is Design Studio and RoboServer, so take a look at the documentation to get a complete picture.

John Marney:

I will say, though, that running RPA on a cloud virtual machine is just about the most perfect implementation I can think of. The reason is that you could actually have the robot add processing power to the server as needed. So, you’re only ever paying for what you need and never any more, which would be really cool.

All right, so we’re going to jump into a very specific use case. We’re going to walk through the reasons for doing this, the challenges this organization faced, our approach and implementation, and some of the results we saw, including some pricing on actually migrating, in this case, a big chunk of Perceptive object storage out to an Azure storage account.

So, we have a series of challenges facing this organization. The first thing was that we are dealing with an organization that has a high volume of documents. So, when I say high volume, I mean three million documents a year or so coming into their Perceptive platform. And that’s not even including multiple pages per document. You definitely don’t need that high of a volume to see this as a wise thing to deploy. But that definitely helped us find ROI faster.

And one of the biggest things that caused this to have a cost to begin with was that we were constantly having to manage disk space. You don’t want to throw a whole bunch of extra terabytes onto the Perceptive storage drives, because that’s wasted space you could be using for other things. The disks may not be that expensive, but they still cost something, and they cost something to maintain.

So, we were frequently adding chunks of disk space multiple times a year to keep up with the growth of the storage matrix. Additionally, it costs somebody time to monitor and manage, plus the labor to actually roll out changes to the SAN to accommodate Perceptive’s disk space growth. On top of that, we have retention policies.

Mike Madsen:

Yeah, and if anybody has set up a retention policy in Perceptive Content, you know that it doesn’t seem complicated, but when you’re actually testing it, it can be pretty frustrating. But if we’re storing everything in the cloud anyway, it’s a little easier to point out specifically the data we no longer need and automate it that way.

John Marney:

So, you may know that you want to delete all your documents that are seven years and older, but you also may know that every document older than two years is never accessed and doesn’t need to be on the expensive high-speed storage attached to our Perceptive Server. That’s another way we could introduce some savings: push those documents out to something cheaper.

As far as our design, how we approached the solution to this problem, we selected Azure, honestly mostly because of our familiarity with it from being Office 365 people ourselves. I had poked around Azure previously just to see what it offered. Most of what we did, you could do on Amazon or any other provider. But there are some cool things that Azure does, or at least has on its roadmap, that others may not ever really be able to replicate. And we’ll talk a little bit about that.
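The age-based split described above (delete at seven years, move anything older than two years to cheaper storage) can be expressed as a simple rule. The sketch below is a hypothetical illustration in Python, not Perceptive’s retention policy manager; the thresholds and action names are assumptions you would replace with your own retention rules.

```python
from datetime import date

# Hypothetical thresholds taken from the example in the webinar; a real policy
# would come from your records-retention rules, not from this script.
MOVE_TO_CLOUD_AFTER_YEARS = 2
DELETE_AFTER_YEARS = 7


def whole_years_between(start: date, end: date) -> int:
    """Number of full years elapsed from start to end."""
    years = end.year - start.year
    if (end.month, end.day) < (start.month, start.day):
        years -= 1  # anniversary not reached yet this year
    return years


def storage_action(created: date, today: date) -> str:
    """Decide where a document should live based on its age."""
    age = whole_years_between(created, today)
    if age >= DELETE_AFTER_YEARS:
        return "delete"
    if age >= MOVE_TO_CLOUD_AFTER_YEARS:
        return "move_to_cloud"
    return "keep_on_local_storage"


if __name__ == "__main__":
    today = date(2019, 10, 16)
    for created in (date(2019, 1, 5), date(2016, 3, 1), date(2010, 7, 20)):
        print(created, storage_action(created, today))
```

Keeping the rule this explicit makes it easy to test before any documents actually move, which helps with the retention-policy frustration Mike mentions.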

So, the first Azure option we considered for our storage account was hot versus cold. We talked about that a little bit; it’s the speed at which they promise to deliver retrieved documents to you. For this case, I actually used cold storage. In my testing, I was going to flip it to hot if it was too slow or didn’t work, but cold storage worked just fine, and there was no noticeable difference.

It probably would be slower the longer a document sits in cold storage without being retrieved, but that’s really okay, right? That’s the point. As far as redundancy, it depends on the type of documents you’re storing and how redundant you need that data to be for your own business purposes. Most of the time these aren’t mission-critical documents, especially if they’re two years old or more. Because redundancy is built into the cloud, you should be fine with the most basic setup, which is what we used.

As far as geolocation, again, that’s where the actual data center is located. This was in the Eastern United States, where there are multiple data centers, and we picked the one closest to our client. Finally, there’s an option we didn’t use: you can set a quota on the storage account if you’re worried about how much you spend per month. You set it as a number of gigabytes, and it can notify you when you’re approaching it, all that kind of stuff.
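If you do use a quota to control spend, the arithmetic is straightforward. This Python sketch assumes the $0.06 per GB per month figure quoted later in the webinar, treats GB and GiB as interchangeable for simplicity, and uses an arbitrary 80% warning threshold; in practice, Azure’s own quota and alert settings would do the monitoring.

```python
import math

# Storage price quoted in this webinar; check current pricing before relying on it.
PRICE_PER_GB_MONTH = 0.06


def quota_for_budget(monthly_budget: float, price_per_gb: float = PRICE_PER_GB_MONTH) -> int:
    """Largest whole-GB quota whose storage charge stays within the monthly budget."""
    return math.floor(monthly_budget / price_per_gb)


def quota_status(used_gb: float, quota_gb: float, warn_fraction: float = 0.8) -> str:
    """Classify usage against the quota; 80% is an arbitrary warning threshold."""
    if quota_gb <= 0:
        raise ValueError("quota_gb must be positive")
    fraction = used_gb / quota_gb
    if fraction >= 1.0:
        return "full"
    if fraction >= warn_fraction:
        return "warning"
    return "ok"


if __name__ == "__main__":
    quota = quota_for_budget(10.00)  # hypothetical $10/month budget
    print(quota, quota_status(140, quota))
```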

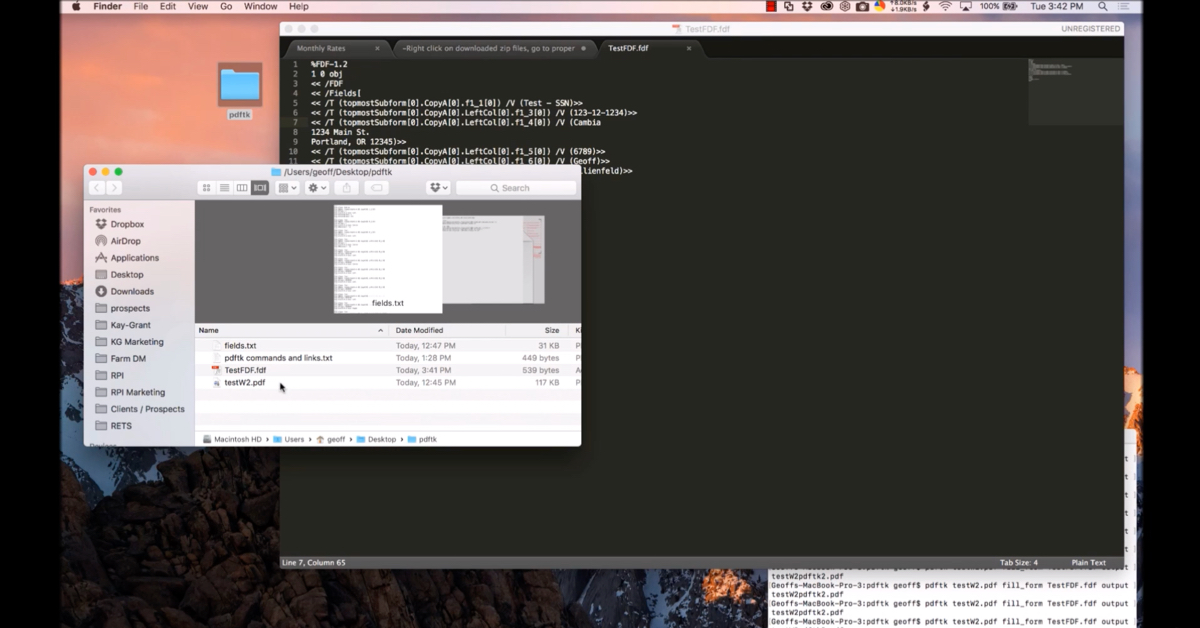

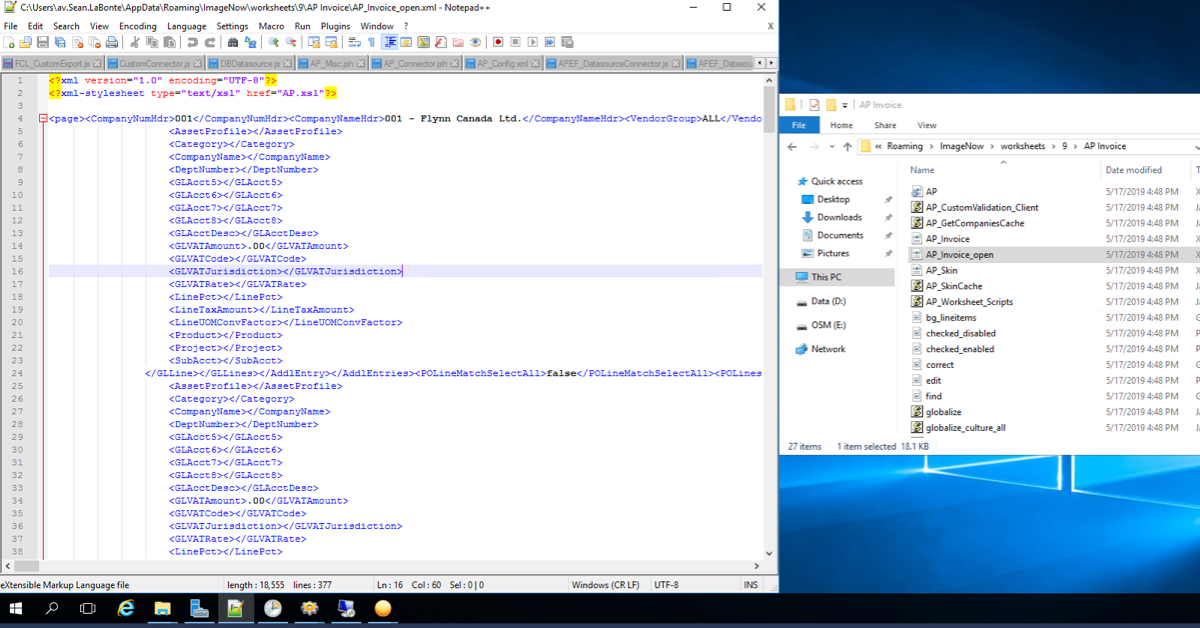

So, on the Windows side then, right, we had our storage account set up. I could actually log in via my Azure account and drop a file in there if I wanted. And this one, again, is an actual storage account that supports... it’s a file... what do they call it? I can’t remember. File storage?

And it supports the SMB 3.0 protocol that we talked about. That matters because the next thing we have to do, on the Windows server where Perceptive Content is installed, is map a drive (the Z: drive, I think, is what I used) to the Azure share. The way I did that is with a couple of PowerShell scripts that I tweaked to provide my login information and map that share down to the server. That way I can navigate directly to those files from the server itself, and if I can see them, that means Perceptive Server can see them.
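The drive mapping itself can be sketched as follows. This is a hypothetical Python equivalent of the PowerShell approach described above, not the actual scripts used: it builds the UNC path of an Azure file share (`<account>.file.core.windows.net`) and a Windows `net use` command that authenticates with the storage account name and key. The account name, share name, and key are placeholders.

```python
import subprocess
import sys


def azure_files_unc(account: str, share: str) -> str:
    # Produces \\<account>.file.core.windows.net\<share>
    return f"\\\\{account}.file.core.windows.net\\{share}"


def net_use_args(drive: str, account: str, share: str, key: str) -> list[str]:
    """Argument list for Windows 'net use', authenticating with the storage account key."""
    return [
        "net", "use", f"{drive}:",
        azure_files_unc(account, share),
        f"/user:localhost\\{account}",  # older Microsoft examples use AZURE\<account>
        key,
        "/persistent:yes",
    ]


def map_drive(drive: str, account: str, share: str, key: str) -> None:
    """Map the share to a drive letter; only meaningful on Windows."""
    if sys.platform != "win32":
        raise RuntimeError("'net use' drive mapping requires Windows")
    subprocess.run(net_use_args(drive, account, share, key), check=True)


if __name__ == "__main__":
    # Hypothetical account and share names; the key is a placeholder.
    args = net_use_args("Z", "examplestorage", "perceptive-osm", "<storage-account-key>")
    print(" ".join(args[:-2] + ["<key hidden>", args[-1]]))
```

One caveat worth checking: drive mappings are tied to a Windows logon session, so a drive mapped interactively may not be visible to a service running under a different account, such as the one that runs Perceptive Content. Making the share credentials available to that service account is part of the setup.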

Mike Madsen:

Yep. And once you have all of that set up, you’re just down to going into InTool, creating your new OSM trees, and pointing them to the new directory.

John Marney:

You do have options here. We had set it up initially so that files stored into that OSM would go out to the file share, and that’s great. You can use a number of different methods to get documents in there; for example, you can set up a command that moves documents from a certain drawer out there. Or you could run a pretty simple iScript, or even a batch file, to run an InTool command that migrates documents out to Azure. You could push everything you have out there very easily, and it’s just a single migration.

Mike Madsen:

And for people with terabytes of OSMs out there, think about how much space you could save just by doing that.

John Marney:

So, we had some great results. I tried to get some real metrics to throw on this slide, but I was not even able to notice a difference between cloud and on-premises retrieval. Again, over time, using cold storage, retrieval may slow down a little bit. However, in pure timing, I couldn’t see a difference in retrieval up front. As far as maintenance, there’s virtually none. And the great thing is that maintenance moves from a very technical storage mechanism to more of a front-end concern.

So, you do have to make sure that Azure has a credit card it can bill, and that credit card can expire and all those things. But that’s managed through account alerts and everything else. You also have to monitor your retention policies and make sure documents are actually moving out to the share, so you’re utilizing it. But again, that’s a front-end application mechanism.

Mike Madsen:

Yep. And for our ROI, we can eliminate some of the things we need to do internally, like having someone come out to put in a new hard drive, or the people we pay to migrate that information. Now that Azure takes care of all of that for us, not only is there a huge cost benefit, but it’s pretty heavy on peace of mind as well.

John Marney:

And the backups could be their own savings. If you moved your entire storage matrix out to a cloud file storage, you don’t really even need to take backups. You might still want to take local backups every once in a while, but you don’t need to do it as often, and the incrementals would be practically useless. Because I believe there are stats showing that local backups are more likely to be destroyed or fail than cloud backups, even in the event of major disaster. So, ultimately there’s a lot of hidden savings as well. And again, the time. It’s the time of the people who typically have to maintain this. So, Michael…

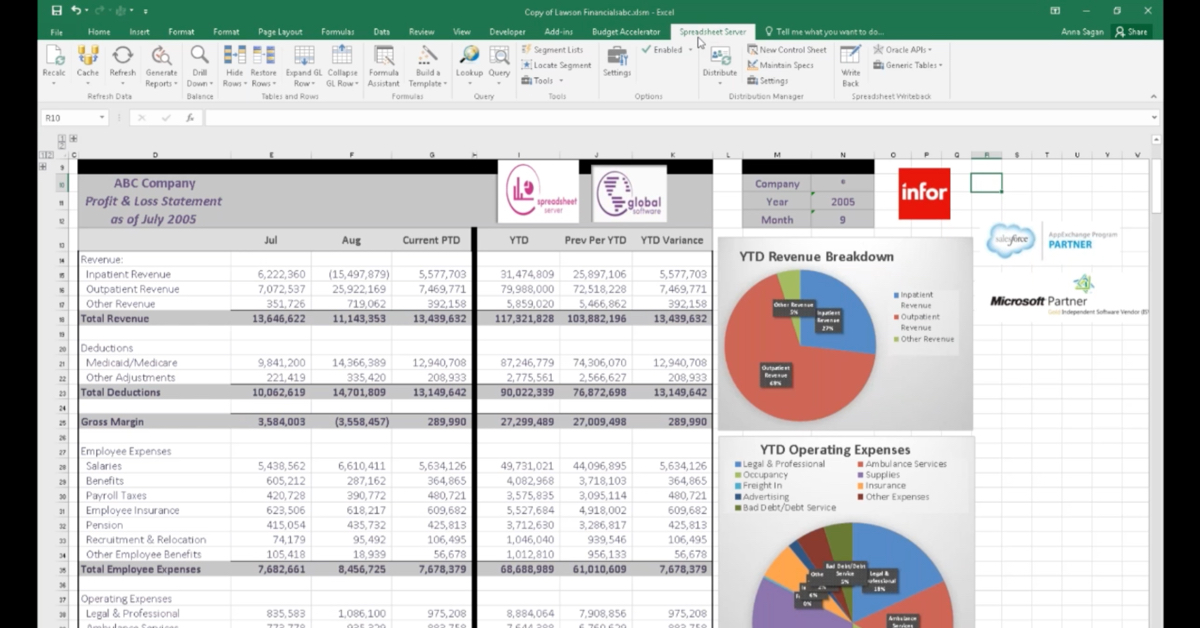

So, results. Okay. So, we’ve got some details. So, for the licensing, you do have to have an Azure subscription. It is a free account and you can do a lot for up to 12 months to poke around some of the free services. For the paid services, like a storage account, it’s free for 30 days and you get up to $200 credit, which would be more than enough to set it up and test it. And the storage, with all the options I selected that I talked to you about, comes in at 6 cents per gigabyte per month. So, if you translate that out to actual document volume, that’s going to be roughly $3 per month per one million documents.

Mike Madsen:

Which is crazy.

John Marney:

It is crazy. Translated to the client in question here: three million documents a year comes to $9 a month, and times 12, that’s $108 a year for this cloud storage.

You’re definitely paying more than that for their time.

Mike Madsen:

Or even just a hard drive.

John Marney:

Or for a hard drive. You can take that to 10 years and probably pay for a hard drive. And there are additional transaction fees that apply to these and they’re extremely low. It’s something like another six cents per 10,000 transactions. And for many of the storage types, read activity is not considered a transaction, which is most of what you’re doing. Only write activity is considered a transaction. So, that’s negligible.
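The storage math above can be checked with a few lines of Python. The $0.06 per GB per month and roughly $0.06 per 10,000 transactions are the figures quoted in this webinar; the 50 KB average document size is an assumption inferred from the “$3 per month per one million documents” estimate (50 GB per million documents at $0.06/GB). Counting each document as one billable write is also a simplification.

```python
# Figures quoted in this webinar (2019); check current Azure pricing before relying on them.
PRICE_PER_GB_MONTH = 0.06
PRICE_PER_10K_TRANSACTIONS = 0.06
# Assumption: about 50 KB per document, which is what "$3 per month per one
# million documents" implies at $0.06/GB (50 GB per million documents).
AVG_DOC_KB = 50


def monthly_storage_cost(documents: int, avg_doc_kb: float = AVG_DOC_KB) -> float:
    """Monthly storage charge in dollars for a given number of stored documents."""
    gigabytes = documents * avg_doc_kb / 1_000_000  # decimal units: 1 GB = 1,000,000 KB
    return gigabytes * PRICE_PER_GB_MONTH


def transaction_cost(transactions: int) -> float:
    """Charge in dollars for a number of billable transactions."""
    return transactions / 10_000 * PRICE_PER_10K_TRANSACTIONS


if __name__ == "__main__":
    yearly_intake = 3_000_000
    monthly = monthly_storage_cost(yearly_intake)
    print(f"Storage for one year's intake: ${monthly:.2f}/month, ${monthly * 12:.2f}/year")
    print(f"Writing that intake once: ${transaction_cost(yearly_intake):.2f}")
```

Because stored documents accumulate, each additional year of intake at this volume would add roughly another $9 a month under the same assumptions.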

Optionally if you don’t own it already, for Perceptive anyway, the retention policy manager or similar, something to actually manage the move of these documents over time out to cloud storage, would be really useful. But you don’t need it.

Mike Madsen:

And we’ve talked about a lot of different products here, so it’s hard to give an exact estimate for each one, but we figure around 60 hours of consulting time to do some migration to the cloud should pretty well cover most things.

John Marney:

And as far as expansion opportunities, things you can do in addition to what we’ve talked about here with the account you already have set up: you could easily roll in additional document types, departments, and processes, and with those retention policies you can push more and more out to the storage account in Azure. You can actually move all of your storage to Azure, including Config and everything else.

One cool thing is the hybrid approach for SQL Server that we talked about a little bit earlier. It’s called Stretch Database, or stretching your SQL Server. SQL Server Management Studio has features built in that let you use Azure services to push your data out there. It’s all tightly integrated and easy to set up, so you get the functionality of your on-premises SQL Server with the benefits of online cloud storage.

And finally, once all of that is done, we move our servers out there. Spin up a few virtual machines on Azure and the integrations are easy because it’s already on the same domain. Okay, so we’re going to give you a few strategies on how to do this for yourself. So, take it in phases. You don’t have to, but I think most IT changes are most consumable in phases. And this is really just a replication of the slide we showed earlier.

Phase one is to get your files and your massive storage out into the cloud. We recommend this as a first phase specifically because it really is just space. As long as the files are accessible, you don’t really, for the most part, have to think about things like technical specifications. You don’t have to think about, “Will my application run? Does it have dependencies? Do I need to use other web services?” So, it’s easy. It’s also one of the things that might take the longest, purely from a migration standpoint rather than an overall implementation standpoint: you may need a weekend or a week to move a whole bunch of files.

Phase two, after the biggest chunks of files are moved, is getting other data and databases into cloud services or cloud storage. Again, this is really just an extension of phase one, but we’re going to take advantage of all the redundancy and disaster recovery benefits that are available.

Mike Madsen:

Yeah. Phase three is when we really begin to use cloud services for isolated functions inside the business. So, move whatever you can to the cloud, especially functions that use data we’ve already moved there. That saves you enough time that you can start planning out how to move everything.

John Marney:

Right, so with phase three you may not be able to rip out OnBase and replace it with a web service that does all the same things, but you could get it into a virtual machine and begin using cloud services for some of the more isolated functions within the platform, to reduce your reliance on it.

And yeah, ultimately the goal should be, if you are committed to a cloud-oriented strategy, to sunset the virtual machines that you’re using for applications, whether they’re on premises or in the cloud.

So, there are a few security considerations you need to be aware of as you approach this. From a user access and account authentication standpoint, you do have to have an actual Azure account. That means when the Perceptive server in our case study goes to retrieve files from the Azure storage account, it has to use a token stored on the server with the Azure account’s credentials.

As of right now, it’s either on Microsoft’s roadmap or freshly implemented, but you cannot use your on-premises Active Directory credentials on these file shares. So, you can’t take an on-premises domain account and grant it permissions on the Azure storage account. What’s cool, because it’s all Microsoft, is that this is something they’re going to implement, if they haven’t very recently, whereas it’s not clear whether someone like Amazon would even have the ability to do that. On top of that, if you have moved your Active Directory into Azure services, you can add those users to the storage account, so that becomes much easier.

Mike Madsen:

And then obviously when we talk about network, if everything’s on the cloud, we have to have internet access. So, just be sure that you have everything set up with the VPN, you’re secure, and check all of your ports because you may have conflicting port values.
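Azure file shares are reached over SMB on TCP port 445, and some networks and internet providers block outbound traffic on that port, so it is worth testing before you map anything. This Python sketch simply attempts a TCP connection; the host name is a placeholder, and Microsoft’s sample mount script performs a similar port-445 check with `Test-NetConnection`.

```python
import socket

SMB_PORT = 445  # SMB, used by Azure file shares


def can_reach(host: str, port: int = SMB_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and DNS failures
        return False


if __name__ == "__main__":
    host = "examplestorage.file.core.windows.net"  # hypothetical storage account
    if can_reach(host):
        print(f"Port {SMB_PORT} is reachable on {host}")
    else:
        print(f"Cannot reach {host}:{SMB_PORT}; check firewalls, VPN, or ISP blocking")
```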

John Marney:

It is important to note that anything you set up, whether it’s a VM, a storage account, or a service, in Azure or any other cloud provider, can have a number of privacy and security layers applied to it. You can make it public, so that it can be accessed from anywhere by anyone with the right authentication. You can make it semi-private, which I believe is basically whitelisting the IPs allowed to connect. And you can make it completely private, which locks it down to basically a VPN pipe.
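The semi-private option amounts to an IP allowlist. Azure enforces this in the storage account’s own firewall settings, so the Python sketch below only illustrates the concept: a client is admitted if its address falls inside one of the allowed ranges. The ranges shown are documentation examples (RFC 5737), not real networks.

```python
import ipaddress

# Hypothetical allowlist using documentation-only ranges (RFC 5737).
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),    # e.g. a head office
    ipaddress.ip_network("198.51.100.16/28"),  # e.g. a data center (.16 to .31)
]


def is_allowed(client_ip: str) -> bool:
    """True if the client address falls inside any allowed network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in ALLOWED_NETWORKS)


if __name__ == "__main__":
    for ip in ("203.0.113.25", "198.51.100.40"):
        print(ip, "allowed" if is_allowed(ip) else "blocked")
```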

And then finally, you have to consider the application services themselves. If you wanted to roll this out today in your Perceptive environment, your OnBase environment, or your Kofax TotalAgility environment, each of those runs on-premises with certain permissions under a certain service account, or what have you. Whatever runs that application will ultimately need a way to use the credentials, or the account authentication, for that file store. Mostly this is done through the token I described, but that has to be applied before it will work.
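One way to confirm that the application’s service account can actually reach the storage credentials is a small preflight check run under that same account. This is a hypothetical sketch: it assumes the credentials are exposed to the service as environment variables named STORAGE_ACCOUNT_NAME and STORAGE_ACCOUNT_KEY, which are placeholder names. Perceptive, OnBase, and TotalAgility each have their own configuration mechanisms.

```python
import os
from collections.abc import Mapping

# Hypothetical setting names; your application may read credentials differently.
REQUIRED_SETTINGS = ("STORAGE_ACCOUNT_NAME", "STORAGE_ACCOUNT_KEY")


def missing_credentials(env: Mapping[str, str]) -> list[str]:
    """Return the required settings that are absent or empty in env."""
    return [name for name in REQUIRED_SETTINGS if not env.get(name)]


if __name__ == "__main__":
    # Run this as the service account, not your own login, so it sees
    # exactly what the application service will see.
    missing = missing_credentials(os.environ)
    if missing:
        raise SystemExit(f"Missing storage credentials: {', '.join(missing)}")
    print("Storage credentials are visible to this account.")
```

Checking under the service account matters because credentials saved while logged in as an administrator are not necessarily visible to the account the service runs as.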

So, I know that was a lot. I feel like that was a lot. I’ve been talking a lot, and my throat is sore. If you have any questions, please type them into the GoToWebinar Questions panel.

In summary, we’ve talked about what the cloud is and some differences between cloud applications. We’ve talked about how the cloud applies to, and the technical specifications for, the business applications you are probably most commonly here for. We went through a case study of us implementing object storage in an Azure storage account, and we talked about some strategies for getting started yourself. So, we’ll take any questions you might have.

We’re just really good explainers. All right, so we have upcoming webinars. Again, just as a reminder, our office hours next month are really cool. We get a lot of questions, and I think some really good knowledge sharing takes place during those Perceptive sessions. On our office hours roadmap, we do have Kofax and OnBase topics as well.

If there’s anything you would like to see presented in a webinar, office hours, or anything else that we can put together for you, we’re happy to do so. Please just reach out and let us know what you’d like to hear more about, including more on this topic. And here’s a little bit about RPI Consultants if you don’t know us: we are a full professional services practice of well over a hundred people at this point, and we are partners with Infor, Kofax, and Hyland.

So, thank you for joining us and have a good afternoon.

Mike Madsen:

Thank you.


